
In our previous article, we explored how a handful of companies have come to control the internet's infrastructure. But why should this concentration of power concern everyday users? The answer lies in a fundamental breakdown of trust.

Imagine discovering that your most trusted advisor has been secretly profiting from decisions made against your best interests. That's essentially what millions of people are realizing about their relationship with technology platforms. While we've been clicking, scrolling, and sharing our lives online, believing these services work for us, a fundamental conflict of interest has been hiding in plain sight.

Recent polling reveals a striking shift in public perception. According to Pew Research, 78% of Americans now believe social media companies wield too much political power -- up from 72% in 2020. Meanwhile, trust in AI companies has declined by 8 points globally since 2019, with 39% of respondents saying technological innovation is "poorly managed" (Edelman Trust Barometer, 2024). This isn't just statistical noise; it's evidence of a growing awareness that Big Tech's interests fundamentally conflict with user welfare.

What is a conflict of interest in tech? A conflict of interest occurs when technology companies' revenue models depend on actions that may harm users, such as maximizing engagement through addictive design features or collecting personal data without clear benefit to the individual user.

The uncomfortable truth is that major technology platforms operate under business models that inherently oppose user interests. When your attention becomes a product to be sold, when your personal data generates billions in advertising revenue you never see, and when algorithms are designed to manipulate rather than inform, the relationship stops being symbiotic and becomes extractive. In this article, you'll discover how fundamental conflicts of interest shape every interaction with major platforms, why traditional trust has broken down, and what this means for our digital future.

Understanding why Big Tech doesn't represent our interests requires examining how these companies actually make money. Despite offering "free" services, the largest technology companies generate hundreds of billions annually through sophisticated data monetization strategies that rarely align with user welfare.

Google exemplifies this fundamental conflict. Over 80% of its revenue comes from advertising, totaling $61 billion in Q4 2021 alone. To generate this revenue, Google must collect, analyze, and sell access to user data on an unprecedented scale. As security expert Bruce Schneier explains: "Google's business model is to spy on every aspect of their users' lives and to use that information against their interests -- to serve them ads and influence their buying behavior" (Privacy Journal, 2024). The Center for Digital Rights echoes these concerns, documenting how tech companies systematically exploit user data for profit while providing minimal transparency about these practices.

The mechanics are more invasive than most users realize. Google processes billions of search queries daily, tracking not just what people search for, but when, where, and how they interact with results. This data feeds sophisticated algorithms designed to predict and influence purchasing decisions. Users searching for mental health resources might find themselves targeted with expensive therapy services. Someone researching medical conditions could face insurance premium increases if that data reaches the wrong hands.

Facebook's model operates similarly but with even more personal information. The platform tracks user connections, messages, content consumption, and interaction patterns across its family of apps including Instagram and WhatsApp. Internal documents revealed through congressional hearings showed Facebook knew its algorithms promoted divisive content because engagement-driven outrage generates more advertising revenue than healthy discourse.

Mozilla's Privacy Not Included blog has extensively documented these practices, revealing that "the average person's data is worth approximately $1,200 per year to data brokers, yet users see none of this value" (Mozilla Privacy Blog, 2024). Their research shows that major tech platforms collect over 5,000 data points per user annually, creating detailed behavioral profiles that influence everything from loan approvals to job opportunities. As Mozilla's latest Internet Health Report emphasizes: "When privacy becomes a luxury good, digital rights become accessible only to those who can afford them."

Behind the scenes, tech platforms operate what the Electronic Frontier Foundation calls "the most pervasive surveillance apparatus in human history" through real-time bidding (RTB) systems. The Digital Rights Foundation further warns that this system creates "a digital panopticon where every click, scroll, and pause becomes a data point for commercial exploitation."

Every time you visit a website or open an app, your personal information -- including location, browsing history, and demographic details -- gets broadcast to potentially hundreds of advertising companies within milliseconds.

This process happens billions of times daily without user awareness or consent. Companies bid on your attention based on profiles they've built about your vulnerabilities, interests, and likely purchasing behavior. The data flowing through these systems includes precise location information, device identifiers, and detailed behavioral patterns that can reveal intimate details about your life.
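To make the mechanics concrete, here is a minimal sketch of a single bidding round in Python. It is an illustration only, not how any real ad exchange is implemented: the `BidRequest` fields, the `Bidder` class, the bidder names, and the simple highest-bid-wins rule are all assumptions made for this example. The point it shows is the one described above: every bidder receives the user's data, including those that lose the auction.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class BidRequest:
    """Hypothetical, simplified stand-in for the data broadcast on a page view."""
    device_id: str
    location: Tuple[float, float]  # (latitude, longitude)
    interests: Tuple[str, ...]


@dataclass
class Bidder:
    """Hypothetical advertiser that prices a request by interest keyword."""
    name: str
    prices: Dict[str, float]
    # Every request this bidder has received, whether or not it won.
    seen: List[BidRequest] = field(default_factory=list)

    def bid(self, request: BidRequest) -> float:
        self.seen.append(request)  # the data is retained even on a losing bid
        return max((self.prices.get(i, 0.0) for i in request.interests), default=0.0)


def run_auction(request: BidRequest, bidders: List[Bidder]) -> Optional[str]:
    """Broadcast the request to every bidder; return the highest bidder's name."""
    bids = {b.name: b.bid(request) for b in bidders}
    if not bids:
        return None
    winner = max(bids, key=bids.get)
    return winner if bids[winner] > 0 else None


request = BidRequest("device-123", (40.71, -74.00), ("fitness", "loans"))
bidders = [
    Bidder("ad_network_a", {"fitness": 0.8}),
    Bidder("ad_network_b", {"loans": 2.5}),
    Bidder("ad_network_c", {}),
]
winner = run_auction(request, bidders)
print(winner)                                    # ad_network_b
print(all(len(b.seen) == 1 for b in bidders))    # True
```

Only one bidder wins the ad slot, but all three now hold a copy of the device identifier, location, and interest profile.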

Amazon operates a slightly different but equally concerning model. While less dependent on advertising than Google or Facebook, Amazon leverages its retail dominance to extract data about purchasing patterns, then uses this information to undercut successful third-party sellers by launching competing products. Small businesses become dependent on Amazon's platform for survival, only to find themselves competing against a platform owner with access to all their sales data.

Perhaps nowhere is the conflict of interest more apparent than in how platforms design their interfaces and algorithms. Technology companies employ teams of neuroscientists, behavioral psychologists, and addiction specialists -- not to help users, but to make their products more compelling and harder to abandon.

Consider the case of Sean Parker, Facebook's founding president, who later admitted: "The thought process that went into building these applications was all about: 'How do we consume as much of your time and conscious attention as possible?'" This isn't accidental design; it's intentional manipulation of human psychology for profit.

Features like infinite scroll, variable reward schedules, and push notifications are borrowed directly from casino design principles. These mechanisms trigger dopamine responses in users' brains, creating what researchers call "behavioral addiction" -- compulsive engagement that mirrors substance addiction in its neurochemical effects. The average American now checks their phone 96 times daily and spends over 7 hours staring at screens, much of it driven by design patterns optimized for engagement rather than user benefit.

Social media platforms employ algorithms that prioritize content likely to generate strong emotional responses, particularly anger and outrage, because these emotions drive higher engagement rates. Internal research at Facebook showed the company understood its algorithms could harm users' mental health, particularly teenagers, but chose not to address these issues because reducing engagement would impact advertising revenue. The Center for Humane Technology has extensively documented how these design patterns mirror those used in gambling and other addictive industries, while Access Now reports that such manipulative design disproportionately affects vulnerable populations including minors and individuals with mental health conditions.

Recent congressional disclosures revealed how little priority tech companies actually place on user welfare. Despite public statements about prioritizing safety, most major platforms have actually reduced their trust and safety teams. Twitter/X cut its trust and safety staff from 3,317 employees in 2022 to 2,849 in 2023. Discord reduced its safety team from 90 employees in 2023 to 74 in 2024, despite growing user bases and increased safety concerns (NBC News, 2024).
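As a quick check on scale, the headcounts cited above can be turned into percentage reductions. The short Python snippet below uses only the figures already quoted; the helper name `percent_decrease` is made up for this example.

```python
def percent_decrease(before: int, after: int) -> float:
    """Return the drop from `before` to `after` as a percentage of `before`."""
    return (before - after) / before * 100


# Headcounts as cited in the paragraph above (NBC News, 2024).
cuts = {
    "Twitter/X (2022 to 2023)": (3317, 2849),
    "Discord (2023 to 2024)": (90, 74),
}

for name, (before, after) in cuts.items():
    print(f"{name}: {percent_decrease(before, after):.1f}% reduction")
# Twitter/X (2022 to 2023): 14.1% reduction
# Discord (2023 to 2024): 17.8% reduction
```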

These cuts occurred even as platforms faced increased regulatory scrutiny and ahead of the 2024 presidential election, when misinformation and harmful content typically surge. The message is clear: when companies must choose between user safety and profit margins, safety gets cut first. Digital rights organization Fight for the Future documented how these staffing reductions directly correlate with increased hate speech and misinformation on platforms, while the Open Technology Institute found that reduced moderation teams led to a 34% increase in harmful content remaining online.

Meanwhile, these same companies spend billions on public relations and lobbying to maintain their image as forces for good. They fund privacy initiatives, sponsor digital literacy programs, and make public commitments to user welfare -- all while operating business models that fundamentally depend on exploiting user data and attention.

The conflict between user interests and platform profits becomes most apparent in how companies deliberately create dependency relationships. Rather than empowering users with tools and choices, major platforms design systems that make leaving difficult or impossible.

Apple exemplifies this through its "walled garden" approach. Once users invest in Apple's ecosystem -- purchasing apps, storing photos in iCloud, and connecting with family through iMessage -- switching to alternative platforms becomes prohibitively expensive and complicated. Apple then leverages this dependency to extract fees from developers and users alike, charging up to 30% on all App Store transactions while providing minimal additional value.

Google operates similar lock-in strategies across its service portfolio. Gmail users accumulate years of email history, Google Drive stores important documents, and Google Photos contains irreplaceable memories. Moving this data to alternative services is technically possible but practically difficult for most users. Google then uses this dependency to introduce new data collection practices, knowing users are unlikely to abandon years of accumulated digital life.

Social networks create perhaps the strongest dependency relationships through what economists call "network effects." Facebook becomes more valuable as more friends join, making individual departure costly even when users disagree with company policies. This dependency allows platforms to gradually erode user protections, confident that the social cost of leaving exceeds the privacy cost of staying.

Tech companies frequently justify questionable practices by claiming they enable innovation and improved services. However, evidence suggests that many "innovations" primarily benefit the companies rather than users. Facial recognition technology is marketed as convenient and secure, but primarily enables more sophisticated tracking and data collection. AI-powered recommendation systems are presented as helpful personalization tools, but function mainly to increase engagement and advertising effectiveness.

When genuinely beneficial innovations do occur, they often get deployed in ways that prioritize company interests over user welfare. Voice assistants like Alexa and Google Home provide useful functionality but also create always-on listening devices in users' homes. Smart home technology offers convenience while establishing unprecedented surveillance capabilities that benefit companies far more than residents.

The innovation narrative also obscures how platform dominance actually stifles genuine innovation. When a few companies control the digital infrastructure that other innovators depend on, they can effectively veto competitive threats. Small developers creating privacy-focused alternatives face insurmountable obstacles accessing users who are locked into dominant platforms.

The conflict between user interests and platform profits extends beyond individual privacy concerns to fundamental questions about democratic governance. When a small number of companies control how billions of people access information, communicate, and form opinions, their business interests inevitably conflict with democratic values.

Platform algorithms don't just reflect public opinion -- they shape it by determining what information billions of people see. Google's search results can influence election outcomes by controlling access to political information. Facebook's news feed algorithms can amplify or suppress social movements based on engagement metrics rather than democratic values. YouTube's recommendation engine has been documented as radicalizing users by suggesting increasingly extreme content that generates higher engagement.

When these platforms operate with minimal democratic oversight, they make decisions about political speech and information access based on corporate policies rather than the public interest. When platforms removed political figures after the January 6th US Capitol attack, the incident demonstrated, regardless of one's political views, that private companies now exercise powers traditionally reserved for democratic institutions. The Mozilla Foundation warns that this concentration of power over information flows represents "a fundamental threat to democratic discourse." At the same time, Public Knowledge advocates for stronger regulatory frameworks to ensure platform decisions serve public rather than private interests.

The global implications are equally concerning. Platforms control information flows for billions of people worldwide, effectively extending corporate influence into the democratic processes of sovereign nations. When these companies make content moderation decisions based on their business interests rather than local democratic values, they undermine other countries' ability to govern their own information environments.

The good news is that the current system isn't inevitable. Technology can be designed to align company interests with user welfare, but this requires fundamental changes to how digital services are structured and funded.

Subscription-based models offer one alternative, allowing companies to profit by serving users rather than exploiting them. Services like Signal, which operates on donations, demonstrate how communication platforms can prioritize user privacy and security. Wikipedia shows how valuable digital resources can be maintained through community support rather than surveillance capitalism.

Decentralized technologies present another path forward. Blockchain-based systems, peer-to-peer networks, and open-source alternatives can reduce dependence on centralized platforms while giving users greater control over their data and digital experiences. On the regulatory side, the European Union's Digital Markets Act is one attempt to force interoperability and prevent lock-in strategies.

Most promising are emerging platforms that make user empowerment rather than data extraction their core business model. These services profit by helping users manage, control, and even monetize their own data rather than secretly harvesting it. The technology exists to build digital infrastructure that serves human interests -- what's needed is the collective will to demand better.

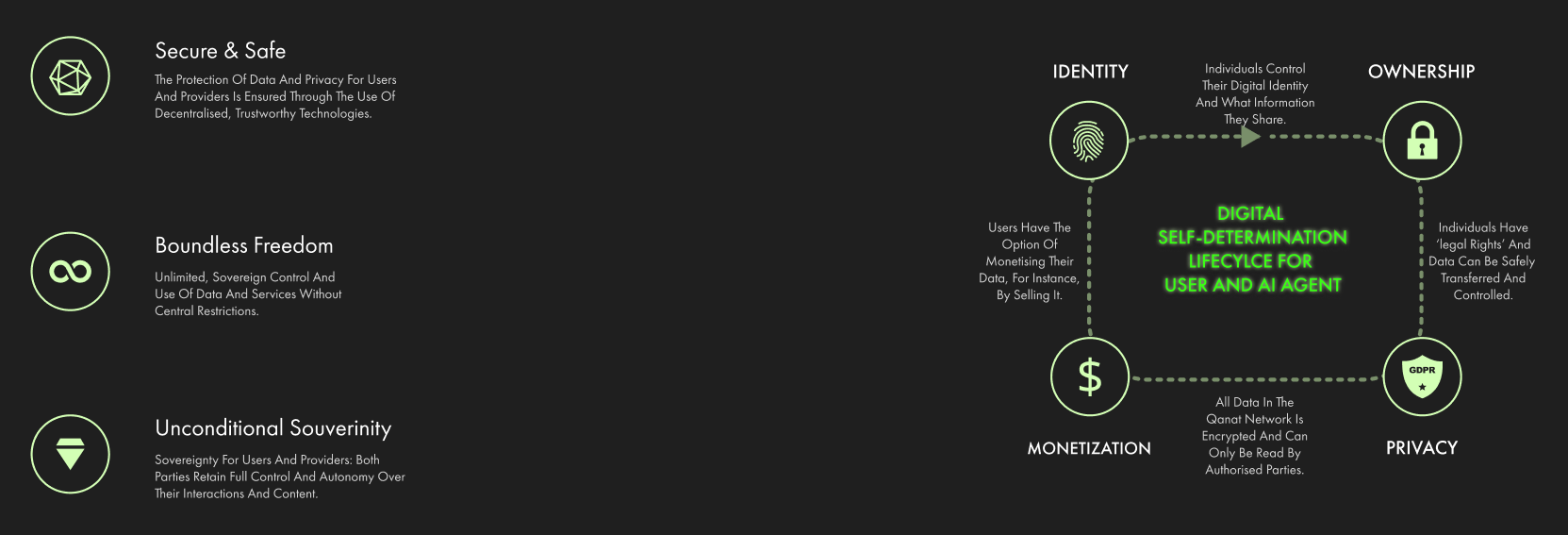

This is where initiatives like QANAT become crucial. By creating technology that returns data sovereignty to individuals and communities, we can begin building digital infrastructure aligned with user interests rather than extractive business models. The vision involves platforms that transparently serve users, where privacy is default rather than premium, and where individuals maintain control over their digital lives.

The conflict between Big Tech's interests and user welfare isn't a minor side effect of technological progress -- it's a fundamental design choice. Current platforms prioritize shareholder profits over user well-being because that's how they're structured to operate. But technology can be built differently, with business models that succeed by empowering rather than exploiting users. The choice between digital freedom and digital exploitation remains ours to make, but only if we recognize the conflicts of interest that currently define our digital lives and demand alternatives that truly serve human interests.

QANAT represents one vision for what user-aligned technology could look like -- platforms designed from the ground up to serve individuals and communities rather than extract value from them. We can build technology that enables human flourishing rather than corporate exploitation. The future of our digital lives depends on our willingness to demand and support alternatives that put user interests first. To truly solve the digital trust crisis, we must build secure, user-owned data ecosystems that can scale. The following image provides an insight into QANAT’s approach.

If you want to dive deeper, QANAT shows you how decentralized identity systems can align technology with user interests, creating sustainable alternatives where privacy and user empowerment drive platform success rather than undermine it.